AI-Powered Educational Innovation

Eureka Labs aims to transform education through the integration of AI, offering innovative and personalized learning experiences. Their primary focus is to combine human expertise with generative AI, creating an AI-native environment that enhances the educational process.

This is the latest project from Andrej Karpathy, AI educator extraordinaire.

Andrej Karpathy is a prominent figure in artificial intelligence, widely recognized for his contributions to deep learning and computer vision. After earning his Ph.D. from Stanford University, where he focused on computer vision under Fei-Fei Li, Karpathy has made significant strides in neural network research, particularly in image and video recognition. Beyond his technical achievements, Karpathy is deeply committed to AI education. He is well-known on YouTube and other platforms for his accessible and insightful tutorials, helping to demystify complex AI concepts for a broad audience. His educational content has played a crucial role in training the next generation of AI researchers and practitioners.

In addition to his educational efforts, Karpathy has worked extensively on autonomous driving technologies, developing advanced AI models that process and interpret vast amounts of visual data in real-time. He is also a strong advocate for ethical AI, emphasizing the need for transparency and safety in AI systems. As a thought leader, Karpathy frequently shares his vision for the future of AI at conferences and through his online presence, inspiring and guiding the AI community towards responsible and innovative advancements.

AI Teaching Assistant

Eureka Labs leverages AI to support teachers by providing scalable, personalized tutoring. The AI can deliver multilingual support, answer student questions, and offer explanations, ensuring that each student receives individualized attention. This approach allows teachers to focus on creating high-quality content while the AI handles repetitive tasks and provides consistent support to students.

LLM101n - The Flagship Course

Course Overview

LLM101n is Eureka Labs' first product, designed as an undergraduate-level course to teach students how to train their own language models. The course is offered online and features both digital and physical cohorts, enabling a comprehensive learning experience.

Course Features

- Practical Training: Students learn by doing, engaging with hands-on projects that involve training and fine-tuning AI models.

- Accessible Learning: The course is available online, making it accessible to a global audience.

- Community Support: Digital and physical cohorts facilitate peer interaction, fostering a collaborative learning environment.

Benefits of AI Integration in Education

- Scalability: AI enables the education system to cater to a large number of students simultaneously without compromising on quality.

- Personalization: Tailored learning paths ensure that each student can progress at their own pace, addressing individual strengths and weaknesses.

- Accessibility: AI provides support in multiple languages and is available 24/7, breaking down barriers to education.

Complex Topics in Each Chapter

Chapter 01: Bigram Language Model (Language Modeling)

A bigram language model predicts the probability of a word based on the preceding word, using pairs of consecutive words (bigrams). This helps in understanding and generating text sequences by capturing local dependencies. The model is foundational in learning basic statistical language patterns.

Chapter 02: Micrograd (Machine Learning, Backpropagation)

Micrograd involves building an automatic differentiation library from scratch to understand gradient computation. This includes implementing backpropagation, crucial for training neural networks. The focus is on the mathematical underpinnings and coding techniques for optimization.

Chapter 03: N-gram Model (Multi-layer Perceptron, Matmul, GELU)

The n-gram model extends language modeling to consider sequences of 'n' words, improving context understanding. Multi-layer perceptrons (MLPs) enhance model complexity by stacking layers of neurons. Matrix multiplication (matmul) and GELU activation functions are used for efficient computations and smoother learning curves.

Chapter 04: Attention (Attention, Softmax, Positional Encoder)

Attention mechanisms allow the model to focus on relevant parts of the input sequence, improving performance on tasks like translation. Softmax functions are used within attention layers to assign weights to different input segments. Positional encoding adds sequence order information to the model, crucial for processing sequential data.

Chapter 05: Transformer (Transformer, Residual, Layernorm, GPT-2)

Transformers revolutionize language models by processing entire sequences in parallel rather than sequentially. Residual connections and layer normalization help in stabilizing and accelerating the training of deep networks. The architecture of GPT-2 exemplifies these principles, showcasing state-of-the-art text generation capabilities.

Chapter 06: Tokenization (minBPE, Byte Pair Encoding)

Tokenization converts text into smaller units (tokens) that can be processed by models, with minBPE being a minimal byte pair encoding technique. This helps in handling out-of-vocabulary words and reduces the input size. Efficient tokenization is crucial for managing large text corpora and improving model performance.

Chapter 07: Optimization (Initialization, Optimization, AdamW)

Effective optimization techniques are vital for training deep learning models, with initialization strategies setting the stage for convergence. AdamW is an advanced optimization algorithm that combines the benefits of Adam with weight decay regularization. These techniques ensure faster and more stable training processes.

Chapter 08: Need for Speed I: Device (Device, CPU, GPU)

Optimizing for different devices, such as CPUs and GPUs, can significantly speed up model training and inference. Understanding hardware capabilities and choosing the right device for specific tasks is crucial. Techniques include parallel processing and leveraging GPU acceleration for intensive computations.

Chapter 09: Need for Speed II: Precision (Mixed Precision Training, fp16, bf16, fp8)

Mixed precision training uses lower precision (e.g., fp16, bf16, fp8) to speed up computations and reduce memory usage without sacrificing accuracy. This involves dynamically adjusting precision levels during training. Such techniques enhance performance on compatible hardware like GPUs and TPUs.

Chapter 10: Need for Speed III: Distributed (Distributed Optimization, DDP, ZeRO)

Distributed optimization strategies, such as Distributed Data Parallel (DDP) and ZeRO, enable scaling model training across multiple devices. These methods help manage large model parameters and datasets by distributing workloads. Efficient communication and synchronization between devices are key to achieving faster training times.

Chapter 11: Datasets (Datasets, Data Loading, Synthetic Data Generation)

Handling large datasets efficiently involves strategies for data loading and preprocessing to ensure smooth training workflows. Synthetic data generation can augment datasets, providing more training examples. These techniques are crucial for improving model robustness and generalization.

Chapter 12: Inference I: kv-cache (kv-cache)

Key-value caching (kv-cache) mechanisms store intermediate results during model inference, reducing redundant computations. This improves the efficiency and speed of generating predictions. Effective caching strategies are essential for deploying models in real-time applications.

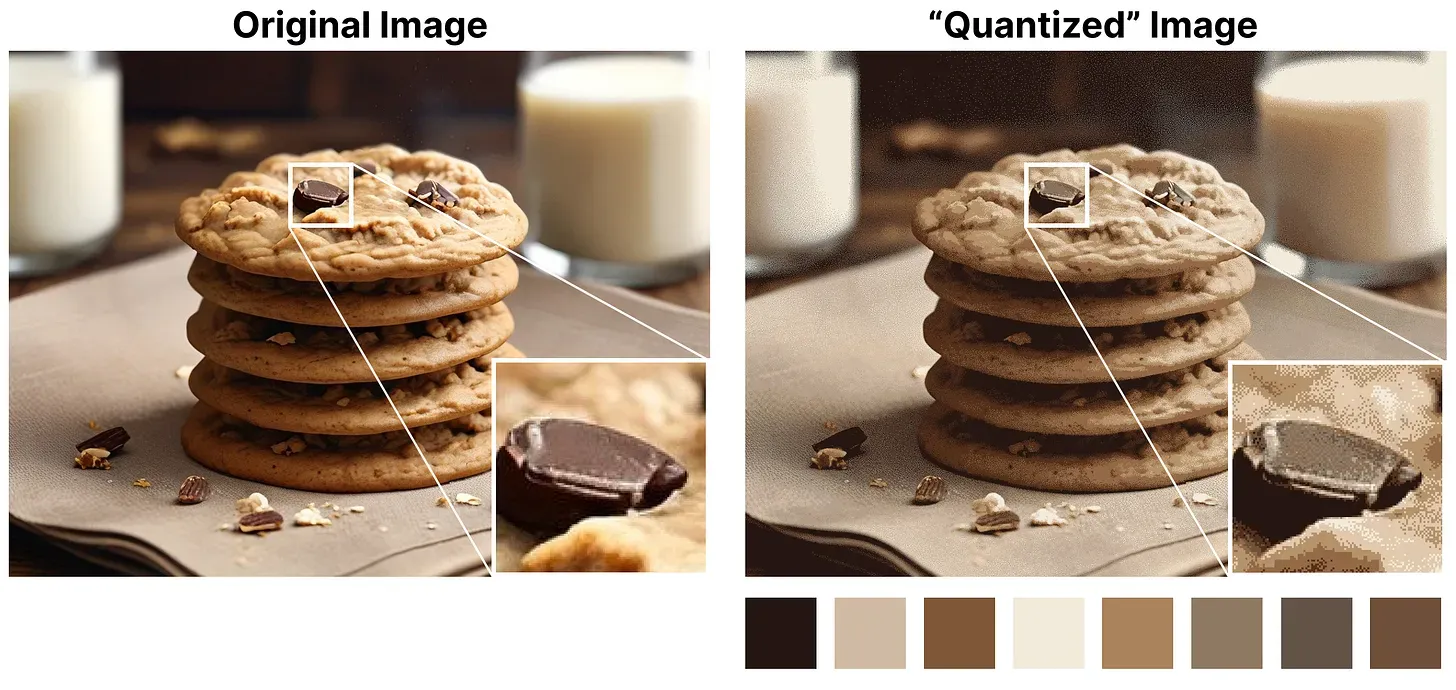

Chapter 13: Inference II: Quantization (Quantization)

Quantization reduces the precision of model weights and activations, lowering memory usage and accelerating inference. This technique is particularly useful for deploying models on resource-constrained devices. It balances the trade-off between model size and performance.

Chapter 14: Finetuning I: SFT (Supervised Finetuning SFT, PEFT, LoRA, Chat)

Supervised fine-tuning (SFT) adapts pre-trained models to specific tasks using labeled data. Techniques like Parameter-Efficient Fine-Tuning (PEFT) and Low-Rank Adaptation (LoRA) optimize the process. These methods are essential for creating specialized chat applications and improving model accuracy on targeted tasks.

Chapter 15: Finetuning II: RL (Reinforcement Learning, RLHF, PPO, DPO)

Reinforcement learning fine-tuning methods, such as Reinforcement Learning from Human Feedback (RLHF) and Proximal Policy Optimization (PPO), enhance model performance through interaction with the environment. These techniques are used to refine model behavior in dynamic scenarios. They are crucial for developing robust and adaptive AI systems.

Chapter 16: Deployment (API, Web App)

Deploying models involves creating APIs and web applications to make AI functionalities accessible to users. This includes designing interfaces for easy integration and usage. Efficient deployment strategies ensure that models can serve predictions reliably and at scale.

Chapter 17: Multimodal (VQVAE, Diffusion Transformer)

Multimodal models integrate different data types (e.g., text, images, audio) using techniques like Vector Quantized Variational Autoencoders (VQVAE) and diffusion transformers. These models can handle diverse inputs, making them versatile for various applications. Advanced architectures enhance the capability to process and generate complex multimodal data.

Competitive Edge

Eureka Labs differentiates itself from competitors by effectively integrating AI into the learning process. This approach not only improves the efficiency of education but also makes learning more engaging and effective. The AI provides instant feedback, keeps students motivated, and helps them understand complex concepts through interactive sessions.

Future Prospects

Eureka Labs plans to expand its offerings by developing more courses and tools that utilize AI to enhance learning. By continuously improving their AI models and incorporating feedback from users, they aim to stay at the forefront of educational innovation.

Key Takeaways

- AI and Human Synergy: Eureka Labs combines the strengths of AI and human expertise to create an optimized learning environment.

- Practical AI Education: Courses like LLM101n equip students with practical skills in AI model training.

- Inclusive Education: AI-powered solutions ensure education is accessible, scalable, and personalized.

For more information, visit Eureka Labs.