We can start with a very quick overview of what serverless inference even means for those just learning about the different levels of infrastructure for AI. Serverless inference is an approach that removes the burden of managing the hardware infrastructure required to deploy and scale AI models. Traditionally, developers would need to provision, configure, and maintain servers or cloud resources to ensure their models run efficiently, especially as demand fluctuates. Let's explore what it takes to get an AI or ML system off the ground and running in 2024.

The 3 Options for Deploying AI and ML Models in 2024

It's just as important to be aware of what we are avoiding when we make the choice to go serverless. Just because we aren't managing it doesn't mean the hardware doesn't exist. If you want to skip this part, you can go directly to the section on serverless inferencing below.

Let’s dive into three main options for anyone trying to run AI today: on-premise hardware, dedicated compute resources, and serverless computing. We’ll explore when and why serverless might be the perfect choice for your needs.

On-Premise Hardware

This is where you buy and maintain your own physical servers. It’s like setting up a workshop in your garage: you have full control, but you also have to deal with all the maintenance and repairs. This option requires a big investment upfront and ongoing care. Plus, if you need to scale up (like when your startup or department grows), it can be slow and costly. Running the latest and greatest NVIDIA hardware often means committing to the purchase six months to a year in advance.

Dedicated Compute Resources

Dedicated resources, like virtual machines or reserved instances in the cloud, offer flexibility and scalability without the hassle of owning physical servers. Think of it as renting an office space—you don’t have to worry about the building itself, but you still need to manage your office setup. You have more control than with serverless, but you also need to watch out for overprovisioning, which can lead to unnecessary costs.

Serverless Computing

Serverless takes a lot of the heavy lifting off your plate. The cloud provider handles the infrastructure for you—scaling up or down as needed. You only pay for what you use, so there’s no wasting money on unused resources. It’s like using a co-working space where everything’s taken care of—you just show up and get to work.

What is Running My AI in 2024?

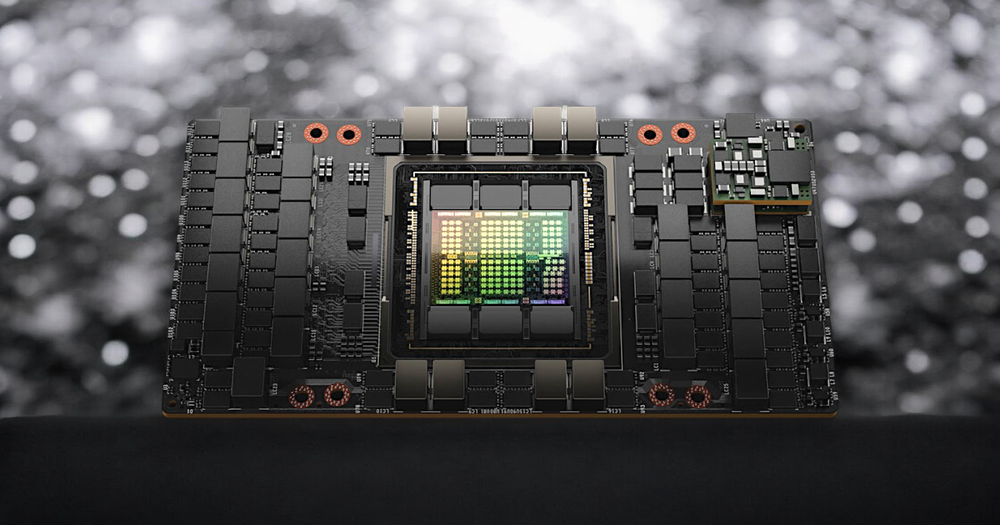

A key component of AI inference is the use of GPUs and accelerated systems, often provided by industry leaders like NVIDIA. These GPUs, including the notably popular NVIDIA H100 GPU which has a street price north of $30,000 per card, are essential for handling the heavy computational demands of AI models, particularly deep learning tasks.

This card in particular has caught the attention of venture capitalists, who hoard it for their portfolio companies, and of teams at OpenAI and Meta. It is considered one of the core building blocks of AI model development. It can also be overkill for tasks where a smaller, more affordable L40S or A100 could do the job.

When you go serverless, you are making a conscious decision to avoid managing this aspect of high-performance computing, so you don't need to spend months sourcing these intensely sought-after GPUs and building datacenters to house them. In a serverless setup, the cloud provider typically manages the allocation of these powerful (and expensive) GPUs, so your models get the resources they need without you having to support and pay for the underlying hardware twenty-four hours a day, seven days a week.

What is Serverless Inference?

In a serverless environment, these hardware- and scaling-level tasks are abstracted away. The cloud provider automatically handles resource allocation, scaling, and maintenance based on the workload. This means that developers can focus solely on building and optimizing their models, deploying them without worrying about underlying hardware or software management.

With serverless inference, you only pay for what you use, so you’re not wasting money on resources sitting idle. Operations are also simplified because the provider takes care of server management, updates, and scaling, freeing up your time. This approach speeds up deployment. You can launch models quickly without taking the time to set up and manage the hardware, making the development process much more agile.
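To make the pay-for-what-you-use point concrete, here is a rough back-of-the-envelope sketch comparing an always-on dedicated instance with per-second serverless billing. Every price and traffic figure below is a made-up placeholder, not a quote from any provider, and the function names are purely illustrative.

```python
def dedicated_monthly_cost(hourly_rate: float, hours_in_month: float = 730.0) -> float:
    """Cost of keeping one dedicated GPU instance running all month."""
    return hourly_rate * hours_in_month


def serverless_monthly_cost(requests_per_month: int,
                            seconds_per_request: float,
                            price_per_second: float) -> float:
    """Cost when billed only for the seconds actually spent serving requests."""
    return requests_per_month * seconds_per_request * price_per_second


def break_even_requests(hourly_rate: float,
                        seconds_per_request: float,
                        price_per_second: float,
                        hours_in_month: float = 730.0) -> float:
    """Monthly request volume at which both options cost the same."""
    cost_per_request = seconds_per_request * price_per_second
    return dedicated_monthly_cost(hourly_rate, hours_in_month) / cost_per_request


if __name__ == "__main__":
    # Hypothetical numbers: $2.00/hour dedicated, $0.001/second serverless,
    # 0.5 seconds of compute per request.
    print("dedicated :", dedicated_monthly_cost(2.0))
    print("serverless:", serverless_monthly_cost(100_000, 0.5, 0.001))
    print("break-even requests/month:", round(break_even_requests(2.0, 0.5, 0.001)))
```

With these placeholder numbers, serverless is far cheaper at low volume, while a busy enough workload eventually favors dedicated capacity. The exact crossover depends entirely on real pricing and real traffic.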

Serverless inference comes with several major advantages for developers in every stage of product development. First, it handles automatic scaling, meaning the system automatically adjusts to meet demand, whether there’s a trickle of requests or a flood. This keeps performance steady without the need to invest in "right sizing" your hardware or dedicated compute. Going serverless allows you to quickly and cheaply scale up, which in turn allows you to make costly hardware decisions with a better sense of actual usage. This cost efficiency makes serverless particularly interesting for startups or companies launching their first AI use case.
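For a feel of what automatic scaling has to decide, the sketch below estimates how many instances are needed at a given request rate, using Little's law (requests in flight = arrival rate × time per request). This is a simplified illustration, not how any particular platform schedules work. The 250 ms latency and the per-instance concurrency are assumed values.

```python
import math


def instances_needed(requests_per_second: float,
                     latency_seconds: float,
                     concurrency_per_instance: int = 1) -> int:
    """Estimate instances required to keep up with steady traffic.

    Little's law: average requests in flight = arrival rate * latency.
    """
    if requests_per_second <= 0:
        return 0  # scale to zero when there is no traffic
    in_flight = requests_per_second * latency_seconds
    return max(1, math.ceil(in_flight / concurrency_per_instance))


if __name__ == "__main__":
    # A trickle versus a flood, assuming 250 ms per request
    # and 4 concurrent requests per instance (both hypothetical).
    for rps in (0, 2, 400):
        print(rps, "req/s ->", instances_needed(rps, 0.25, 4), "instance(s)")
```

On a serverless platform this calculation happens for you continuously. With dedicated compute, you would have to pick one number ahead of time and live with it.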

Let’s break down what serverless inference entails, why it’s significant, and how it works in practice. Here’s a step-by-step overview of how serverless inference typically works:

- Model Training: The machine learning model is trained on a dataset using various algorithms, which can happen locally or in the cloud. After training, the model is serialized into a format like TensorFlow SavedModel, ONNX, or a custom format.

- Model Deployment: The trained model is then deployed to a cloud storage service (e.g., Amazon S3) or bundled directly with the serverless function. The model can be accessed by the function whenever it is invoked.

- Function Invocation: When a request is made (e.g., an API call to predict housing prices), the serverless function is triggered. It retrieves the model if necessary, processes the input data, runs the inference, and returns the output.

- Scaling and Management: The serverless platform handles all scaling based on the number of incoming requests. If there’s a spike in demand, it automatically provisions more compute resources to handle the load.

- Response: The serverless function processes the request and sends back the prediction to the client.
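The steps above can be sketched as a single function. This is a minimal, provider-agnostic illustration: the `handler(event)` signature, the event shape, and the toy housing-price "model" are all assumptions made for the example, and the `time.sleep` call merely stands in for downloading and deserializing a real model from storage.

```python
import json
import time

_MODEL = None  # cached at module level so warm invocations can reuse it


def _load_model():
    """Stand-in for fetching a serialized model and loading it into memory."""
    time.sleep(0.05)  # simulate the load cost paid on a cold start
    weights = {"sqft": 150.0, "bedrooms": 10000.0}  # toy linear model
    bias = 50000.0

    def predict(features):
        return bias + sum(weights[name] * features[name] for name in weights)

    return predict


def handler(event):
    """Entry point the platform would call once per request (hypothetical shape)."""
    global _MODEL
    cold_start = _MODEL is None
    if cold_start:
        _MODEL = _load_model()  # retrieve the model only if necessary

    features = json.loads(event["body"])  # process the input data
    prediction = _MODEL(features)         # run the inference
    return {                              # return the output to the client
        "statusCode": 200,
        "body": json.dumps({"prediction": prediction, "cold_start": cold_start}),
    }
```

Calling `handler({"body": json.dumps({"sqft": 1000, "bedrooms": 3})})` twice in the same process loads the model on the first call only. The second call reuses the cached copy, which is the difference between a cold and a warm invocation.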

Challenges in Serverless Inference in 2024

It isn't all roses—there are some issues to be aware of with serverless as well. While serverless inference offers impressive benefits in scalability and cost management, it also brings a set of challenges that developers must address. In 2024, these obstacles, ranging from latency concerns to resource constraints, highlight the complexities of adopting this approach in AI deployment. Understanding these challenges is absolutely necessary if you want to save money and time with serverless inferencing.

- Cold Start Latency: One of the most commonly cited challenges with serverless inference is cold start latency, where there is a delay when a serverless function is invoked after being idle. This can be a significant issue for latency-sensitive AI applications, such as real-time bidding in ad tech or live fraud detection in financial services. Fortunately this is one of the most rapidly advancing areas in the AI space, and innovative solutions are available to speed this process up. In a typical serverless inference scenario with a warm start, the total time delay is often around 100 to 300 milliseconds. However, with a cold start or a complex model, the delay can stretch to 500 milliseconds to a few seconds.

- Complexity in Monitoring: Serverless architectures can be difficult to debug and monitor, especially as they scale. This complexity arises from the distributed nature of serverless applications, where functions are stateless and often short-lived. Observability tools such as New Relic and OpenTelemetry are important for understanding user behavior and forecasting future operations.

- Resource Limits: Serverless platforms impose certain limits on resources such as memory, execution time, and storage. These limits can be restrictive for large AI models or long-running inference tasks. While these constraints are generally sufficient for smaller models, they may require optimization or even the use of specialized serverless platforms for more demanding tasks. Unfortunately, resource constraints never go away entirely, and managing them is as easy as it will ever be while your stack is exclusively serverless.
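A quick way to see whether resource limits will bite is to estimate how much memory a model's weights need. The sketch below does that arithmetic. The 10 GiB platform limit and the 1.2× overhead factor for activations and runtime are illustrative assumptions, not figures from any provider.

```python
def weights_memory_gib(num_parameters: int, bytes_per_parameter: int = 2) -> float:
    """Memory needed for the weights alone (2 bytes per parameter = 16-bit)."""
    return num_parameters * bytes_per_parameter / 2**30


def fits_platform(num_parameters: int,
                  memory_limit_gib: float,
                  bytes_per_parameter: int = 2,
                  overhead_factor: float = 1.2) -> bool:
    """Rough check against a platform memory limit.

    overhead_factor is an assumed allowance for activations and runtime.
    """
    needed = weights_memory_gib(num_parameters, bytes_per_parameter) * overhead_factor
    return needed <= memory_limit_gib


if __name__ == "__main__":
    params = 7_000_000_000  # a 7-billion-parameter model
    limit = 10.0            # hypothetical platform memory limit in GiB
    print("16-bit weights (GiB):", round(weights_memory_gib(params), 2))
    print("fits at 16-bit:", fits_platform(params, limit))
    print("fits at 8-bit :", fits_platform(params, limit, bytes_per_parameter=1))
```

Under these assumptions the 16-bit model does not fit while an 8-bit quantized version does, which is the kind of optimization the resource-limit bullet refers to.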

Big Names in Serverless Inference in 2024

The buzz around serverless inference has caught the attention of some major players in the tech world, beyond the usual suspects of Amazon, Google, and Microsoft. Recently, NVIDIA and Hugging Face teamed up to change how AI models are deployed. This collaboration is set to make it easier and faster to run AI workloads without the hassle of actually owning AI hardware. For those who are unfamiliar with Hugging Face, it has become the de facto hub for new AI model releases, deployment, rankings, and information.

NVIDIA and Hugging Face Team Up

In their recent partnership, Hugging Face’s powerful AI models are being paired with NVIDIA’s DGX Cloud, which is a high-performance AI cloud service. This combo lets developers deploy large AI models without worrying about the nuts and bolts of traditional infrastructure. Essentially, you get the best of both worlds, top-tier AI models running on cutting-edge hardware, without the usual headaches of setting it all up. The result is one-click inference deployment through Hugging Face Inference Endpoints.
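Once an endpoint like this is deployed, calling it is an ordinary authenticated HTTP request. The sketch below only builds the request using Python's standard library. The endpoint URL and token are placeholders, and the `{"inputs": ...}` payload shape is an assumption that should be checked against the deployed model's task, since different tasks may expect different fields.

```python
import json
import urllib.request


def build_inference_request(endpoint_url: str, token: str, text: str) -> urllib.request.Request:
    """Build (but do not send) a POST request for a hosted inference endpoint."""
    payload = json.dumps({"inputs": text}).encode("utf-8")  # assumed payload shape
    return urllib.request.Request(
        endpoint_url,
        data=payload,
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    # Placeholder URL and token; substitute the values from your own deployment.
    request = build_inference_request(
        "https://example.invalid/my-endpoint", "hf_placeholder_token", "Hello, world"
    )
    print(request.get_method(), request.full_url)
    # To actually send it:
    # with urllib.request.urlopen(request) as response:
    #     print(json.loads(response.read()))
```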

A key part of this partnership is NVIDIA NIM (NVIDIA Inference Microservices), which NVIDIA developed and which is a major focus of the company going forward. NIM helps optimize how large language models are deployed and scaled in a serverless environment at both startup and enterprise scale.

Why It Matters

This collaboration is a big deal because it shows how serverless inference is evolving. With NVIDIA and Hugging Face working together, it’s now easier for companies to use advanced AI models without having to invest heavily in infrastructure or deal with the tricky parts of scaling. They also have the opportunity to use the latest hardware directly from NVIDIA in combination with the bleeding edge of AI models at Hugging Face.

Innovative Serverless Startups in 2024

Beam.cloud

Beam.cloud is another big name making waves in the serverless inference world. This open source platform is designed to simplify how developers deploy AI models, allowing them to sidestep the tricky parts of hosting infrastructure.

- Simplicity and Ease of Use: Beam is designed with simplicity in mind. It offers an intuitive, user-friendly interface that makes deploying AI models straightforward, even for those without deep technical expertise. This is especially beneficial for smaller teams or startups that need to get their models up and running quickly without getting bogged down in complexity.

- CI/CD Integration: For teams that use continuous integration and continuous deployment (CI/CD) pipelines, Beam.cloud is easy to integrate. It’s designed to work smoothly with these workflows, helping you push updates quickly and reliably. This means you can keep your AI models up to date without missing a beat.

Inference.ai

Inference.ai is designed to offer cost-effective AI deployment solutions, paired with reliable support, making it the go-to platform for developers seeking value without compromising on quality.

- Efficient, Cost-Effective Deployment: Inference.ai operates on a model that emphasizes efficiency and cost-effectiveness. By offering a flexible pricing structure, you only pay for the compute power you actually use. This pay-as-you-go approach ensures that you’re not wasting money on unused resources, making it a smart choice for projects of any size, especially those with varying workloads.

- Advanced Features for Secure and Scalable Solutions: With support for advanced features like secure handling of sensitive data and the ability to manage complex storage needs, Inference.ai is built for projects that require both scalability and security. These features ensure that your AI models can handle real-world demands while keeping data protected.

Koyeb

Koyeb stands out in the serverless space by offering robust support for a wide range of development environments, including Python, Go, Node.js, Java, Scala, and Ruby. This versatility makes it an ideal platform for teams working across different languages, allowing seamless deployment through Git or Docker.

- Optimized for High-Performance AI Workloads: Koyeb’s use of Firecracker microVMs on bare metal servers provides a unique advantage for AI workloads. These microVMs offer the isolation and security of traditional VMs while delivering the reduced latency and density typically associated with containers.

- Secure and Scalable AI Pipelines: For complex AI inference tasks, Koyeb delivers more than just processing power. Its built-in service mesh facilitates secure, encrypted communication between services and microservices, crucial for maintaining data integrity and security as your AI models advance through multiple stages, ensuring safe and scalable deployments.

What's Next for Serverless Inference?

Serverless inference represents a significant shift in how AI models are deployed and scaled, offering developers a more streamlined and cost-effective approach to infrastructure management. By abstracting away the complexities of server management, it lets solo and fast-moving developers focus on model design and customer experience, speeding up deployment and reducing operational overhead. There certainly are customer-facing challenges, such as cold start latency and overall resource limitations, which can cause crashes or lost data. This is to be expected, however, as the space is changing rapidly and what is true one month may be old news the next.

This serverless approach democratizes access to powerful AI tools and empowers smaller teams and startups to innovate without the burden of heavy infrastructure costs. As AI changes quickly, serverless inference is likely to become a best practice in the AI deployment playbook, enabling more agile and scalable solutions for a wide range of applications, from startup MLOps all the way up to financial and healthcare enterprise deployments.